Training a single neural network can require a volume of water sufficient to fill a nuclear reactor, while our queries to ChatGPT create a carbon footprint comparable to industrial manufacturing. Here is the price our planet pays for our dialogue with artificial intelligence.

When you ask ChatGPT to write a business letter or come up with a recipe, text appears on your screen in a matter of seconds. In reality, at that very moment, in a giant data center perhaps thousands of kilometers away, thousands of powerful processors spring into action. They consume colossal amounts of energy, and the facilities that house them require millions of liters of water for cooling. The result of your single query is not just a smart answer, but also a few grams of CO₂ released into the atmosphere and tens of milliliters of evaporated water. Multiply that by hundreds of millions of daily users, and you have an environmental time bomb.

We marvel at the revolutionary capabilities of large language models (LLMs), yet stubbornly ignore their environmental cost. While the AI industry is growing exponentially, its carbon footprint is becoming less of a phantom and more of a tangible reality.

How Much Does AI Consume?

Researchers have found that training just one of OpenAI's language models, the third-generation GPT-3, required energy equivalent to the annual consumption of 120 American households and led to emissions of 550 tons of CO₂. This is comparable to the carbon footprint of 60 round-the-world flights on a Boeing 747.

The models are only getting more voracious. While GPT-3 contained 175 billion parameters, modern flagship models are approaching the trillion mark. Each new generation requires an order of magnitude more computation, and therefore more energy. Furthermore, training is not a one-time event but a continuous process: fine-tuning on new data, creating specialized versions, and experimenting with architectures.

The energy source is also critically important. A data center in a region reliant on coal (as in some parts of Asia and the USA) has a carbon footprint 5-10 times higher than a similar center in Scandinavia, where hydropower dominates. The eco-friendliness of AI turns out to be geographically dependent.
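The geographic effect comes down to simple multiplication: emissions equal the energy consumed times the carbon intensity of the local grid. The sketch below illustrates this. The training energy and both grid intensities are hypothetical round numbers chosen for illustration, not measurements of any real data center or region.

```python
def emissions_tonnes(energy_mwh, grid_kg_co2_per_kwh):
    """Emissions in tonnes of CO2 for a given energy use and grid intensity.

    1 MWh = 1000 kWh and 1 tonne = 1000 kg, so the two factors of 1000
    cancel and MWh times (kg per kWh) gives tonnes directly.
    """
    return energy_mwh * grid_kg_co2_per_kwh


# Hypothetical inputs, for illustration only.
TRAINING_ENERGY_MWH = 1000.0   # assumed energy for one training run
COAL_HEAVY_GRID = 0.8          # assumed kg CO2 per kWh
HYDRO_HEAVY_GRID = 0.1         # assumed kg CO2 per kWh

coal = emissions_tonnes(TRAINING_ENERGY_MWH, COAL_HEAVY_GRID)
hydro = emissions_tonnes(TRAINING_ENERGY_MWH, HYDRO_HEAVY_GRID)
print(f"coal-heavy grid:  {coal:.0f} t CO2")
print(f"hydro-heavy grid: {hydro:.0f} t CO2")
print(f"ratio: {coal / hydro:.1f}x")
```

With these assumed intensities, the same training run emits about eight times more CO₂ on the coal-heavy grid, which falls inside the 5-10 times range mentioned above.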

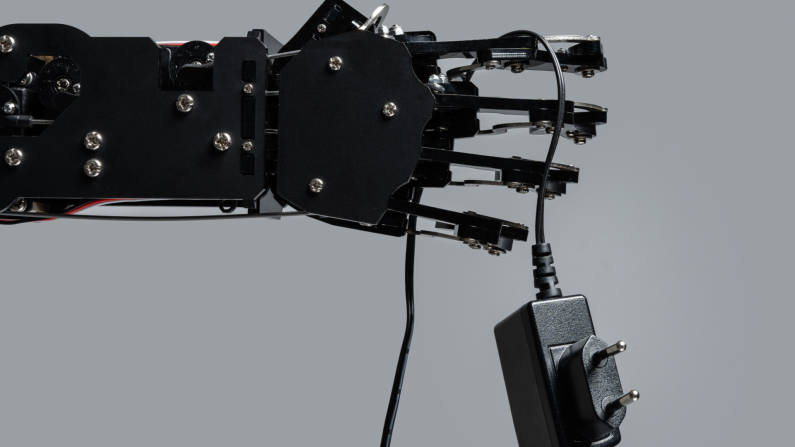

A less obvious but no less critical resource is fresh water, relentlessly consumed by cooling systems. Modern data centers use evaporative cooling: water evaporates, drawing heat away from the servers, and leaves the system permanently. This is efficient, yet wasteful.

Research has shown that Microsoft's data centers alone, used for training and running AI models, consumed approximately 2.5 billion liters of water, the equivalent of several hundred Olympic-sized swimming pools. Moreover, a single dialogue with an AI assistant comprising 20-50 questions can "evaporate" up to 500 milliliters of fresh water, about one standard bottle.
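The per-dialogue figure can be broken down into a rough per-question estimate. The sketch below takes the 500 milliliters and the 20-50 question range from the text. The daily query count is a hypothetical round number used only to show how the total scales.

```python
def water_per_question_ml(dialogue_ml, questions):
    """Average water evaporated per question, in milliliters."""
    return dialogue_ml / questions


def daily_water_liters(queries_per_day, ml_per_question):
    """Total water per day in liters for a given query volume."""
    return queries_per_day * ml_per_question / 1000


DIALOGUE_ML = 500  # upper bound per dialogue, from the text

low = water_per_question_ml(DIALOGUE_ML, 50)   # long dialogue: 10.0 ml
high = water_per_question_ml(DIALOGUE_ML, 20)  # short dialogue: 25.0 ml
print(f"per question: {low:.0f} to {high:.0f} ml")

# Hypothetical volume of 100 million queries per day, for illustration.
ASSUMED_QUERIES_PER_DAY = 100_000_000
print(f"per day: {daily_water_liters(ASSUMED_QUERIES_PER_DAY, low):,.0f} liters")
```

Even at the low end of 10 milliliters per question, the assumed 100 million daily queries would add up to about a million liters of water a day.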

This problem is particularly acute in arid regions. In the US state of Arizona, which has been experiencing a water crisis for many years, building a data center is far from straightforward. Projects face resistance from local residents, as tech giants begin to compete for water with farmers and households.

The Path to "Green" AI

The technology community proposes several solutions to make AI's operation more eco-friendly.

Energy-Efficient Algorithms are the most promising direction. Knowledge distillation is an optimization technique in which a compact model is trained to replicate the behavior of a larger, more complex one, including its output probability distributions and internal data representations. The resulting compact models perform almost as well as their giant predecessors at a fraction of the cost. Quantization, the compression of numerical representations in neural networks, can reduce energy consumption by 4-5 times without significant loss of quality.
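Both ideas can be shown in a few lines of plain Python. What follows is a minimal sketch, not how any particular framework implements them. The distillation part covers only the matching of output probability distributions (it leaves out internal representations), and the quantization part uses a simple symmetric 8-bit scheme. All function names and sample values are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence between the softened teacher and student distributions.

    Training the student to minimize this pushes its outputs toward the
    teacher's outputs.
    """
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


# Illustrative values only.
print(distillation_loss([2.0, 1.0, 0.1], [1.5, 1.2, 0.3]))
weights = [0.52, -1.27, 0.03, 0.9]
quantized, scale = quantize_int8(weights)
print(quantized)
print(dequantize(quantized, scale))
```

Storing each weight as an 8-bit integer instead of a 32-bit float cuts the memory per weight by a factor of four, at the price of a rounding error of at most half the scale per weight.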

"Green" Data Centers are ceasing to be a marketing ploy and becoming a technological necessity. Transitioning to 100% renewable energy is just the first step. Immersion cooling, where servers are fully submerged in dielectric fluid, is 5 times more efficient than traditional systems. Building data centers in Scandinavian countries with natural cooling, or even underwater, as in the Microsoft Project Natick experiment, shows striking results in energy efficiency. The key metric here is PUE (Power Usage Effectiveness): the ratio of the total energy a facility consumes to the energy that actually reaches its servers. The ideal is 1.0 (all energy goes to the servers, zero to infrastructure). The average PUE for terrestrial data centers is around 1.4-1.6, while Natick achieved just 1.07, one of the best figures in the world.
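To see what these PUE values mean in practice, it helps to convert them into overhead energy. PUE is total facility energy divided by the energy delivered to IT equipment, so everything above 1.0 is spent on cooling, power conversion, and other infrastructure. The sketch below uses the PUE values quoted above. The 1,000 kWh server load is an assumed round number.

```python
def pue(total_facility_kwh, it_equipment_kwh):
    """Power Usage Effectiveness: total facility energy over IT energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_kwh(it_equipment_kwh, pue_value):
    """Energy spent on infrastructure for a given IT load and PUE."""
    return it_equipment_kwh * (pue_value - 1.0)


IT_LOAD_KWH = 1000.0  # assumed server consumption, for illustration

for label, value in [("typical, low", 1.4), ("typical, high", 1.6), ("Natick", 1.07)]:
    extra = overhead_kwh(IT_LOAD_KWH, value)
    print(f"{label:14s} PUE {value:.2f}: {extra:.0f} kWh overhead")
```

For the same server load, a facility at PUE 1.07 spends roughly 70 kWh on infrastructure where a typical one spends 400 to 600 kWh.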

Transparency and Reporting are key demands from AI companies. So far, only a few publicly report on the carbon and water footprint of their models. Creating standards for "eco-labeling" of AI services would allow users to consciously choose less resource-intensive alternatives.

Usage Optimization is something accessible to everyone. One shouldn't turn to AI for tasks easily handled by a regular search engine. Using lighter models where the capabilities of GPT-4 are not needed is a simple way to reduce the collective environmental footprint.

The environmental cost of artificial intelligence is vast, multifaceted, and will only grow. But this problem is not a death sentence for the technology; it is a challenge that demands a systemic response. For companies, this means genuine, not superficial, transparency, investments in "green" computing, and efficient algorithms. For regulators, it means incorporating the IT sector into strict environmental standards and creating incentives for reducing the carbon footprint. And for users, in turn, it means conscious consumption.
