When, in 2022, the Midjourney neural network generated the work "Théâtre D'opéra Spatial," which won a digital art competition in Colorado, it caused a shock. Many saw this as the sunset of human creativity.

However, a few years later, the hype died down, and it became clear: instead of replacing artists, generative artificial intelligence created a fundamentally new ecosystem in which machine algorithms never became full-fledged authors, but forced many artists to reconsider the concepts of originality and ethics. Yet, the contemporary discourse on creativity and artificial intelligence is already unimaginable without the bright, often surreal images created by a machine from a text prompt.

How AI Generates a New Style

The simplified notion that AI merely memorizes fragments of training data and recombines them in different variations is mistaken. Models such as Stable Diffusion are trained on massive datasets such as LAION-5B, which contains billions of image-text pairs. The key difference between AI art and earlier experiments with computer graphics lies in the machine's capacity for generalization and synthesis. Rather than stitching together ready-made fragments from a database, the model identifies deep-seated patterns, abstract concepts, and stylistic techniques, and uses them to create new visual compositions that did not exist in its training dataset.

Although the roots of computer art trace back to the 1960s, when pioneers like Frieder Nake and A. Michael Noll created the first algorithmic patterns, the birth of generative AI art in its current form is commonly dated to the mid-2010s. The turning point was the emergence in 2014 of the GAN (Generative Adversarial Network), developed by Ian Goodfellow and his colleagues.

The principle of GAN operation was revolutionary: two neural networks—the "generator" and the "discriminator"—entered into a kind of competition. The generator created images, and the discriminator tried to distinguish them from real photographs or paintings. In this confrontation, the generator learned to produce increasingly plausible and complex visual images. It was GANs that gave the world the first viral example of AI art—the "Portrait of Edmond de Belamy," created by the Parisian collective Obvious and sold at Christie's auction in 2018 for a record $432,500. Thus, algorithms entered the art market.
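The competition between the two networks can be made concrete with their loss functions. The following is a minimal sketch, not a full training loop: it assumes the discriminator outputs a probability between 0 and 1 that a sample is real, and it uses the commonly cited cross-entropy form of the two objectives. The function names and the example scores are hypothetical and chosen only for illustration.

```python
import math

def discriminator_loss(real_scores, fake_scores):
    """Cross-entropy loss: low when real samples score near 1 and fakes near 0."""
    real_term = -sum(math.log(s) for s in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term

def generator_loss(fake_scores):
    """Non-saturating generator loss: low when fakes are scored near 1 (mistaken for real)."""
    return -sum(math.log(s) for s in fake_scores) / len(fake_scores)

# Hypothetical discriminator outputs (probability that a sample is real).
real_scores = [0.90, 0.95]    # real paintings, correctly scored high
caught_fakes = [0.10, 0.05]   # early generator: fakes are easily spotted
fooled_fakes = [0.90, 0.95]   # later generator: fakes pass as real

print("discriminator loss, fakes caught:", discriminator_loss(real_scores, caught_fakes))
print("discriminator loss, fakes pass:  ", discriminator_loss(real_scores, fooled_fakes))
print("generator loss, fakes caught:    ", generator_loss(caught_fakes))
print("generator loss, fakes pass:      ", generator_loss(fooled_fakes))
```

The same set of fake scores pushes the two losses in opposite directions: whatever lowers the generator's loss raises the discriminator's. In training, each network is updated in turn to reduce its own loss, which is the "confrontation" described above.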

However, the real boom occurred later, with the spread of diffusion models. If GANs operated on the principle of "rivalry," diffusion models, like those underlying modern Stable Diffusion and Midjourney, operate differently, more subtly. They gradually "corrupt" the training image with noise, and then learn to restore the picture from this noise. As a result, the model grasps the very essence of how structure emerges from disorder. When a user provides a text prompt, the model simply repeats this process of "cleansing" the noise but directs it along the course set by the prompt.
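The "corruption" half of this process is simple enough to sketch directly. The example below is an illustration under stated assumptions, not the code of any particular model: it assumes a linear noise schedule with typical textbook values, treats a list of five numbers as a toy "image," and uses hypothetical function names. It shows only the forward noising step; the learned denoising network is omitted.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that grow linearly (assumed schedule and values)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t]: the fraction of the original signal variance left after step t."""
    alpha_bars = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(pixels, alpha_bar, rng):
    """Forward step: blend the clean signal with Gaussian noise in one shot."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [signal_scale * p + noise_scale * n for p, n in zip(pixels, noise)]
    return noisy, noise

rng = random.Random(0)
image = [0.0, 0.25, 0.5, 0.75, 1.0]  # toy 5-pixel "image"
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))

for t in (0, 499, 999):
    noisy, noise = add_noise(image, alpha_bars[t], rng)
    print(f"step {t}: signal fraction {alpha_bars[t]:.5f}, noisy pixels {noisy}")
```

During training, a network sees the noisy pixels and the step index and is asked to predict the noise that was added. At generation time it starts from pure noise and removes the predicted noise step by step, with the text prompt supplied as an extra input that steers each step.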

The AI Art Market: From Digital Fairs to Corporate Content

The market for generative art is rapidly evolving. Today, it exists on several planes:

- NFT and Collecting

The peak of hype around AI art as a "new cryptocurrency" has passed, giving way to a niche market. Collectors now pay not for random pictures but for works by established digital artists who use AI as one tool in their arsenal, bringing curation and artistic intent to the process.

- Corporate Content

This is the largest and most financially significant segment. Companies use models like DALL-E 3 (integrated into ChatGPT) or Midjourney for rapid prototyping of visual concepts, creating illustrations for internal presentations, and generating ideas for advertising banners. This reduces dependence on stock images and speeds up the production of visual content.

- Exhibitions and Institutional Recognition

The Museum of Modern Art (MoMA) in New York, for example, has already held exhibitions featuring works created with the help of AI. One landmark exhibition that attracted widespread attention was "Refik Anadol: Unsupervised," which opened at MoMA in late 2022. Anadol used a machine learning model to analyze and interpret more than 200 years of art in the museum's collection. The AI generated abstract, constantly changing visual flows that were not a copy, but rather the machine's "vision" of the entire history of modern art. The reaction was mixed: some critics and viewers were fascinated by the hypnotic beauty of the installation, seeing in it a dialogue between the human history of creativity and its digital future. Others questioned whether the project was more a technological attraction than a profound artistic statement, noting that the emotional depth of human experience had been replaced by cold, albeit complex, statistical processing.

In parallel, in 2023, the Gagosian gallery in London, as part of the Frieze fair, presented the large-scale project "The Great Madness," created by artist Mario Klingemann in collaboration with AI. The work was a generative portrait of a crowd in which people's faces were constantly distorted, melted, and transformed, creating an alarming image of the collective unconscious. This installation provoked sharper controversy. Viewers noted that the work evoked a sense of discomfort and alienation, which was, however, the artist's intention. Critics were divided: some praised Klingemann for the precise use of AI to visualize contemporary social anxieties, while others accused the project of inhumanity and technical fetishism, with the message drowning in the demonstration of the algorithm's capabilities.

The reaction of the art community and the public to these exhibitions allows us to identify several persistent theses:

- The Changing Role of the Artist. Museums now value not the prompt engineer, but the artist-curator who formulates the concept, prepares the data, guides the model, and imbues the final result with meaning. Museum curators now speak not of "AI works," but of "works by artists using AI."

- Debates about the Sincerity of Such Art. Many viewers, in reviews and on social media, continue to express skepticism, arguing that AI-created images "have no soul" and are "just a pretty picture." This emotional response, or lack thereof, remains the main barrier to the full acceptance of AI art by the mass audience.

- Institutions as Arbiters. The very fact that museums like MoMA provide their platforms serves as a powerful filter. They select not random images, but projects in which technology is a tool for meaningful exploration of fundamental questions: what is creativity, memory, perception, and how are they transformed in the digital age.

The Front Line: Scandals and Ethical Protests

The flowering of the technology has provoked a powerful wave of resistance from the art community. The platform DeviantArt, for example, faced a user boycott after introducing a generative feature trained on data without the explicit consent of the authors. The key complaints can be reduced to three points:

- Non-Consensual Training. Artists accuse companies of using their works to train commercial models without permission or compensation. A large-scale class-action lawsuit against Stability AI, Midjourney, and DeviantArt, filed by a group of artists including Sarah Andersen, Kelly McKernan, and Karla Ortiz, became a symbol of this struggle. The plaintiffs claim that the models learned to create works in their unique style, effectively appropriating the result of their years of labor.

- Style Plagiarism. Users demonstrate that prompts containing the names of living artists (e.g., "in the style of Greg Rutkowski") can generate images that closely imitate their original works, undermining the artists' economic foundation.

- Fair Use. Developer companies appeal to the doctrine of "fair use," arguing that training on publicly available data is a transformative act. Their opponents insist that the commercial use of an artist's style without consent constitutes copyright infringement.

The New Ethics of Authorship: Where is the Line of Collaboration

Attempts to recognize AI as a full-fledged author are failing. Copyright offices and courts in various countries, including the United States, have so far largely declined to protect works created without "creative intervention" by a human. The key concept is "creative intent."

Authorship remains with the human who:

- Formulates the prompt, saturating it with concepts, context, and references.

- Performs iterative selection and "seeding," guiding the neural network towards the desired result.

- Conducts post-processing and final refinement of the image in graphic editors.

The most illustrative precedent is the graphic novel "Zarya of the Dawn." In 2022, author Kristina Kashtanova received a copyright registration for the book, but the US Copyright Office later partially cancelled it, leaving the registration in place for the text and the arrangement of elements while revoking protection for the images generated by Midjourney.

In its explanation, the US Copyright Office clearly stated its position: copyright protects only works created by the human mind. It determined that Kashtanova, although she composed detailed text prompts, could not predict or fully control the final output produced by the AI. The generated images were deemed a "product of a machine," not the "intellectual conception" of a human, and therefore ineligible for protection. This case became a benchmark and is frequently cited in subsequent disputes.

An even more radical attempt was made by Stephen Thaler, a scientist and inventor who for several years tried to obtain patents naming his AI system DABUS as inventor and to register copyright for an image produced by another of his systems, the Creativity Machine. He explicitly listed the AI as the sole creator and author.

His application to register the image "A Recent Entrance to Paradise" was rejected. In 2023, the US District Court for the District of Columbia upheld this refusal. Judge Beryl Howell emphasized in her decision that human authorship is a bedrock requirement of copyright. The court ruled that authorship, as understood in US copyright law and the Constitution, is an exclusively human prerogative, and that non-human entities cannot be authors.

The ethics of the new era demand transparency. Labels such as "generated with AI" are increasingly appearing in work descriptions, and communities are developing standards for indicating the models and prompts used.

Future Trends

The future of AI in creativity lies not in creating a single, all-powerful model, but in the realm of hyper-personalization.

- Personal LoRA Models (Low-Rank Adaptation). Artists can now train compact and inexpensive adapters based on their own works. This allows them to create a "digital double" of their style, which can be used to generate new images while remaining within a unique visual language. This is simultaneously a tool for style protection and a powerful means of scaling it.

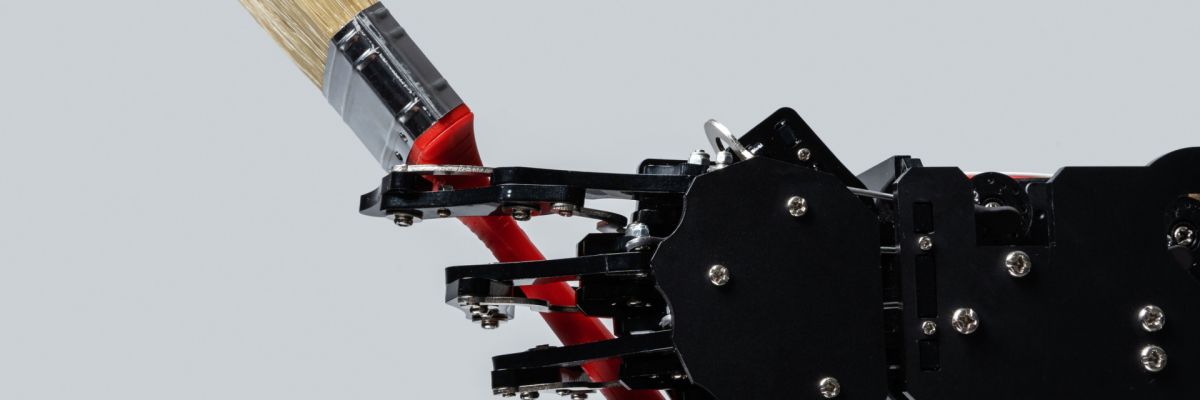

- Partnership. AI is becoming an ideal partner for brainstorming. Designers use it not to get a finished picture, but for rapid visual exploration: "show me 50 variants of a chair design in the Bauhaus style, but using biomaterials." This speeds up the process of iterating through possibilities, leaving the final meaningful choice and refinement to the human.

- Contextual Co-Creation: AI as an "Active Canvas." If earlier interaction with AI resembled a dialogue with a black box—send a request, get a result—now interactive co-creation technologies are gaining strength. This is not just about generating variants, but about working in a unified environment where AI adapts in real-time to the artist's actions. Platforms like Runway ML are already implementing features where an artist can start with a sketch or a rough composition, and the AI will suggest options for its stylization, detailing, and completion based on the context. For example, an interior designer makes a sketch of a room, and the AI immediately suggests several lighting options, wall textures, and furniture arrangements that harmonize with the original sketch. This turns AI from a generator into an "active participant" that does not just output static images, but engages in a visual dialogue, offering ideas that the artist can accept, reject, or develop without interrupting the workflow. This trend blurs the line between stages: idea, sketch, and final visualization now occur in a single, continuous flow with AI's participation.

- Proactive Creativity: AI that Studies and Anticipates Tastes. The next logical step after personal style models (LoRA) is the development of systems that learn not only from the artist's works but also from their creative habits, aesthetic preferences, and unfinished projects.

This can be called "proactive creativity." A neural network integrated into the artist's digital workspace (e.g., a graphics tablet or editor) analyzes their actions: which brushes they most often choose, which color palettes they prefer, which sketches they leave unfinished and why. Over time, such a system begins not just to respond to requests, but to actively propose solutions that, with high probability, will correspond to the author's internal intent.

For example, while working on concept art for a fantasy character, an artist might receive a suggestion from the AI: "Based on your past works with armor and your preference for asymmetrical forms, I have generated three variants of shoulder pads that might suit this sketch. Also, you often stopped at the stage of working on skin textures—shall I suggest several options?"

This turns AI from a tool into a kind of "creative assistant" that deeply understands its owner's unique style and methodology and helps overcome creative blocks by offering ideas that seem surprisingly appropriate and personalized.
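The personal LoRA adapters mentioned in the list above are "compact and inexpensive" because they leave the base model's weights frozen and learn only a small low-rank correction. The following is a minimal sketch of that arithmetic in plain Python, assuming the usual formulation in which the effective weight is the base weight plus a scaled product of two thin matrices. The function names, the tiny 4x4 layer, and the 768-wide layer used for the parameter count are hypothetical choices for illustration.

```python
def matmul(a, b):
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_weight(base, down, up, alpha):
    """Effective weight = frozen base + (alpha / rank) * up @ down.

    down has shape (rank x in_features); up has shape (out_features x rank).
    """
    rank = len(down)
    scale = alpha / rank
    delta = matmul(up, down)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(base, delta)]

def adapter_param_count(out_features, in_features, rank):
    """Number of trainable values in the two adapter matrices."""
    return rank * (in_features + out_features)

# Toy 4x4 "layer" with a rank-1 adapter.
base = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
down = [[1.0, 0.0, 0.0, 0.0]]
zero_up = [[0.0], [0.0], [0.0], [0.0]]   # a zero adapter leaves the base model unchanged
trained_up = [[0.5], [0.0], [0.0], [0.0]]

untouched = lora_weight(base, down, zero_up, alpha=2.0)
adapted = lora_weight(base, down, trained_up, alpha=2.0)
print("untouched == base:", untouched == base)
print("adapted first row:", adapted[0])

# Trainable values: full 768x768 layer versus a rank-4 adapter for it.
print("full layer:", 768 * 768, "adapter:", adapter_param_count(768, 768, 4))
```

Because only the two thin matrices are trained and stored, an artist's adapter is a small file that can be loaded on top of a shared base model or removed again without altering it.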

Of course, generative AI has not given birth to new, artificial artists in the form of neural networks, but it has provoked a reassessment of the very essence of creativity. It has acted as a catalyst, forcing a rethinking of the value of human conception, the boundaries of style, and the necessity of a new "digital ethics" in art.
