Computers are often compared with the brain. Recently, these biological and mechanical systems have become connected. What opportunities does this technology provide and what challenges does it pose to humanity?

Technology plays an increasingly important role in our lives. What seemed incredible only a few years ago is a necessity today. It is impossible to do without it in everyday life. The idea of connecting a living human brain to a computer sounds intimidating and may be incomprehensible to some. But as long as there are people in need of this technology, it is only a matter of time until it is widely adopted.

What is a neural computer interface?

A neural computer interface is a system that exchanges information directly between the brain and an electronic device. In a broad sense, the term also covers artificial replacements for the sense organs (for example, a bionic eye) and similar devices. These devices work in one direction only and have long been used in medicine.

Bidirectional interfaces are of much greater interest. In them, both the brain and the external device can send and receive signals. Such two-way communication between the brain and the outside world was long a dream of science fiction writers, and more recently of scientists.

Many such interfaces work by recording the electrical activity of the brain. This activity is captured as an electroencephalogram (EEG), which a computer analyzes to infer the user's intentions.
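To make the idea of a computer "analyzing" an EEG more concrete, here is a minimal Python sketch of one common first step: measuring how much power a signal carries in a given frequency band, such as the alpha band (roughly 8-12 Hz). The function name `band_power`, the 128 Hz sampling rate, and the synthetic test signal are assumptions made for illustration. A naive discrete Fourier transform is used for readability; real systems rely on FFT libraries and much more preprocessing.

```python
import math


def band_power(signal, fs, low_hz, high_hz):
    """Return the summed spectral power (|X_k|^2 / n) of DFT bins between low_hz and high_hz.

    signal: list of EEG samples; fs: sampling rate in Hz.
    A naive O(n^2) discrete Fourier transform keeps the example dependency-free.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the constant (DC) offset
    power = 0.0
    for k in range(1, n // 2 + 1):  # positive-frequency bins only
        freq = k * fs / n  # frequency of bin k in Hz
        if not low_hz <= freq <= high_hz:
            continue
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        power += (re * re + im * im) / n
    return power


# Synthetic one-second "EEG": a strong 10 Hz (alpha) wave plus a weak 20 Hz (beta) wave.
FS = 128  # assumed sampling rate, samples per second
eeg = [
    10 * math.sin(2 * math.pi * 10 * t / FS) + 2 * math.sin(2 * math.pi * 20 * t / FS)
    for t in range(FS)
]

alpha = band_power(eeg, FS, 8, 12)
beta = band_power(eeg, FS, 13, 30)
print(f"alpha={alpha:.1f}, beta={beta:.1f}")  # → alpha=3200.0, beta=128.0
```

Because the synthetic signal is dominated by its 10 Hz component, the alpha band carries far more power than the beta band; an interface could then use such band powers as input features.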

A brief history of neural interfaces

As noted above, neural computer interfaces are closely linked with the EEG. To understand how humanity arrived at the idea of connecting the brain to a machine, it therefore helps to trace the history of this technology.

The history of electroencephalography

The groundwork for the EEG was laid in Germany by the physiologist Emil Heinrich du Bois-Reymond, to whom we owe the very existence of electrophysiology.

In 1849, du Bois-Reymond concluded that the human brain has electrogenic properties. This was the starting point for the study of electrogenesis, the ability of the brain to generate electrical current. He was also the first to describe several regularities that characterize electrical signals in nerves and muscles.

Similar questions were of interest to the English scientist Richard Caton. In 1875 he showed that the brains of rabbits and monkeys generate electricity.

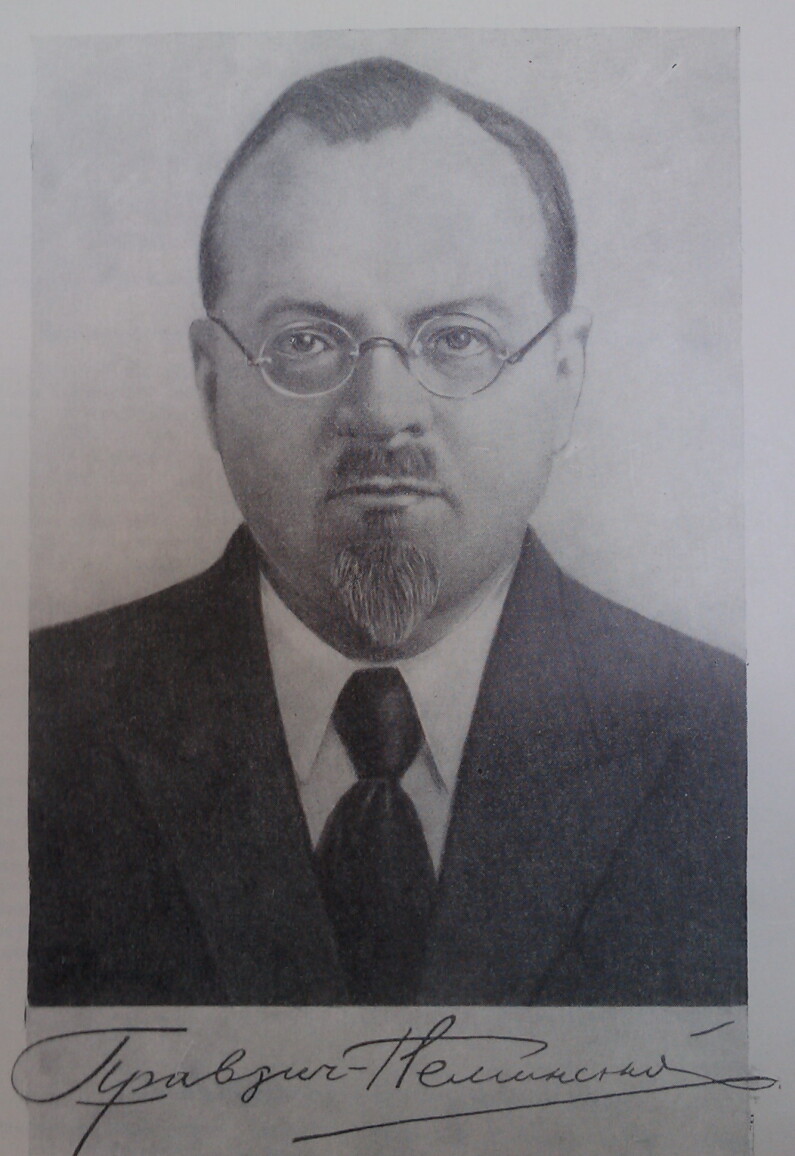

The Russian scientist Vladimir Pravdich-Neminsky played a huge role in the development of the EEG. He is often credited with recording the first electroencephalogram in history, in 1913 at the University of Kiev. His subject was a dog. Notably, the animal's skull was left intact: the signals were recorded through it using a string galvanometer.

The professor’s achievements did not end there: he coined the term “electrocerebrogram” and was the first to classify the frequencies of the brain's electrical activity. He also described rhythms in animals that correspond to what are now called alpha and beta waves.

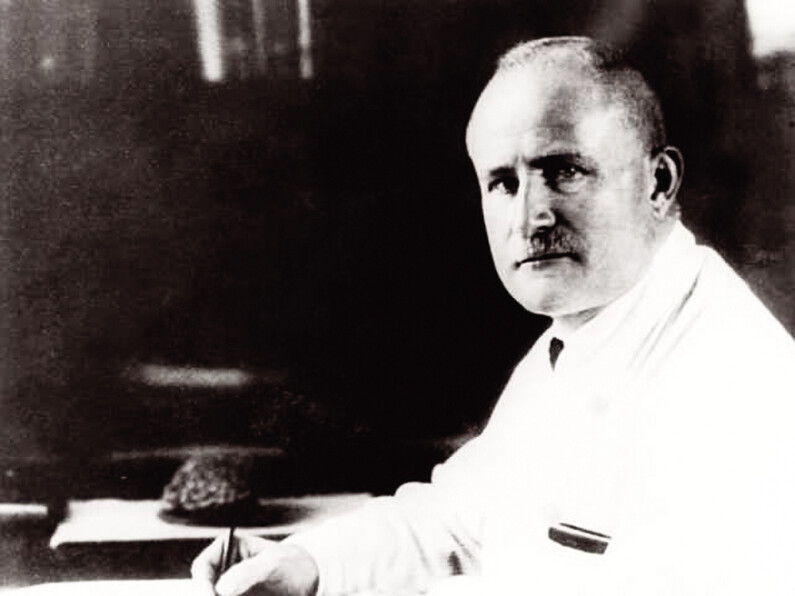

Modern EEG technology would not exist without the German psychiatrist Hans Berger, who in 1924 recorded the electrical activity of the human brain as a wave pattern. He used a galvanometer of the same kind, reading the signals from the patient's scalp. The alpha rhythm he described (8-12 Hz) is also called the "Berger wave" in his honor.

An experiment with a bull: the point of no return

One of the first to put the idea of combining a brain and a computer into practice was Yale University professor Jose Manuel Rodriguez Delgado. He invented the stimoceiver, a device implanted in the brain and controlled by radio signals.

In 1963, he implanted a stimoceiver into the brain of a bull and was able to influence the animal's behavior by remote control. This event made a great impression on society and became the starting point for the development of neural interfaces as we know them today.

The 1960s and 1970s were a turning point. Both the military and civilian researchers, for example in medicine, became interested in the possibility of connecting the brain to a computer. In 1972, doctors began implanting devices that convert sound into electrical signals delivered to the auditory nerve, an early form of the cochlear implant. The following year, the term “brain-computer interface” entered the scientific literature.

Until the end of the 1990s, experiments were carried out mainly on animals, whose brains were connected to various devices. In the course of these experiments, researchers were able to restore lost sensory functions and even lost motor skills. In 1998, a neural interface was implanted in one of the first human patients, Johnny Ray, who had survived a stroke that left him unable to move.

With the help of an implant in his brain, Ray learned to move a computer cursor by thought alone. A person cut off from the outside world was thus able to interact with others again.

In recent years

In 2004, a similar operation was performed on a man named Matthew Nagle. Over the previous six years, the capabilities of neural interfaces had grown, and Matthew was able to check his e-mail, play simple video games, and use drawing programs. He also learned to change TV channels, adjust the volume, and even control a mechanical arm.

Also in 2004, an artificial silicon chip was created in Cleveland that could connect living neurons with inanimate electronics. It established a reliable two-way connection between organic cells and transistors, sensitive enough to detect even the slightest change in electrical charge.

In 2008, the first commercial neural interface appeared, and the technology began to attract wide public attention. Interest has continued to grow, and many experts believe that neural interfaces will become one of the major technologies of the 21st century.

This view is shared by billionaire Elon Musk, who co-founded Neuralink, a company working in this field that was announced in 2017. He plans to implant devices in paralyzed people that would give them full access to the Internet. Other companies are pursuing similar goals.

With today's fast wireless Internet, such plans no longer seem so fantastic. Neural interfaces could allow people with disabilities to browse the web freely, communicate with their relatives, or even control robotic devices that restore their ability to move. Musk and other enthusiasts are working toward this goal.

Brain-computer interfaces: types and features

There are two main ways of classifying neural interfaces: by how commands are given (active, reactive, and passive) and by how the device is connected to the brain (invasive and non-invasive).

Any such classification is somewhat arbitrary, and many more subtypes could be distinguished based on the individual characteristics of a given interface. For example, devices could be grouped by purpose into medical, military, and entertainment ones. We will not dwell on such details here and will instead classify the devices by their technical features.

Active neural interfaces

In active neural interfaces, the user initiates commands independently. For example, a patient imagines making a movement, and the system reads the resulting brain signals and translates them into specific actions.

Such devices are widely used in medicine, for example, to restore the function of damaged limbs during rehabilitation or to learn how to use a prosthesis.

Game and competitive elements have also proved beneficial for rehabilitation. The video below shows two patients competing to tip a bottle so that it pours into the cup on their side. Such exercises are both entertaining and useful.

One of the most notable achievements of active neural interfaces is the ExoAtlet exoskeleton project, which already enables people with disabilities to move around without assistance.
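The imagined-movement example above can be sketched in Python. The sketch relies on a well-documented effect called event-related desynchronization (ERD): imagining a right-hand movement tends to reduce mu-band power (about 8-12 Hz) over the left motor cortex, recorded at electrode C3, and imagining a left-hand movement reduces it over the right motor cortex at C4. The function names, the 20% threshold, and the assumption that band power has already been measured are hypothetical. Practical systems usually train a classifier on each user's data rather than applying a fixed rule like this one.

```python
from typing import Optional


def erd_percent(task_power: float, rest_power: float) -> float:
    """Event-related desynchronization: percentage drop in band power relative to rest."""
    return (rest_power - task_power) / rest_power * 100.0


def decode_motor_imagery(
    c3_power: float,
    c4_power: float,
    c3_rest: float,
    c4_rest: float,
    threshold: float = 20.0,  # hypothetical minimum ERD (%) before a command is issued
) -> Optional[str]:
    """Map mu-band power at electrodes C3 and C4 to a "left"/"right" command, or None.

    Imagining a right-hand movement tends to suppress mu power over the left
    motor cortex (C3); imagining a left-hand movement suppresses it over the
    right motor cortex (C4).
    """
    erd_c3 = erd_percent(c3_power, c3_rest)
    erd_c4 = erd_percent(c4_power, c4_rest)
    if max(erd_c3, erd_c4) < threshold:
        return None  # no clear intention detected, so the system does nothing
    return "right" if erd_c3 > erd_c4 else "left"


# Mu power at rest was 10.0 at both electrodes (arbitrary units).
print(decode_motor_imagery(6.0, 9.5, 10.0, 10.0))  # → right
print(decode_motor_imagery(10.0, 5.0, 10.0, 10.0))  # → left
print(decode_motor_imagery(9.8, 9.9, 10.0, 10.0))  # → None
```

The "do nothing" branch matters in practice: an active interface should not move a prosthesis or exoskeleton unless the user's intention is reasonably clear.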

Reactive neural interfaces

In reactive neural interfaces, the user's command is issued in response to an action by the system. A classic example of a reactive neural interface is a communication system based on the “P300 effect”. Such systems record the brain's electrical response to letters of the alphabet that flash on a screen. The user types by focusing on the desired letter and counting how many times it flashes; each flash of that letter evokes a characteristic positive wave in the EEG roughly 300 ms later, which the system detects.
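The letter-selection step of a P300-based system can be sketched as follows. Because a single EEG response is buried in noise, the responses to many flashes of each letter are averaged, and the letter whose average is largest around 300 ms is chosen. This is a simplified, assumed setup: each letter flashes on its own (classic spellers flash rows and columns of a letter grid), the 250-450 ms window and the 100 Hz sampling rate are illustrative choices, and the data are synthetic.

```python
import math
import random

FS = 100  # assumed sampling rate in Hz
EPOCH_LEN = 60  # 600 ms of EEG recorded after each flash
P300_WINDOW = range(round(0.25 * FS), round(0.45 * FS) + 1)  # 250-450 ms after the flash


def pick_letter(epochs_by_letter):
    """Return the letter whose averaged post-flash response is largest in the P300 window.

    epochs_by_letter maps each letter to a list of epochs (lists of samples),
    one epoch per flash of that letter.
    """
    def score(epochs):
        # Averaging across flashes cancels much of the background EEG noise.
        avg = [sum(e[i] for e in epochs) / len(epochs) for i in range(EPOCH_LEN)]
        return sum(avg[i] for i in P300_WINDOW) / len(P300_WINDOW)

    return max(epochs_by_letter, key=lambda letter: score(epochs_by_letter[letter]))


def simulate_epoch(rng, is_target):
    """Synthetic epoch: uniform noise, plus a P300-like bump near 300 ms for the target."""
    epoch = []
    for i in range(EPOCH_LEN):
        t = i / FS
        bump = 10 * math.exp(-0.5 * ((t - 0.3) / 0.05) ** 2) if is_target else 0.0
        epoch.append(bump + rng.uniform(-1, 1))
    return epoch


rng = random.Random(42)
target = "C"  # the letter the user is silently counting
recordings = {
    letter: [simulate_epoch(rng, letter == target) for _ in range(10)]
    for letter in "ABCDE"
}
print(pick_letter(recordings))  # → C
```

Averaging is the key design choice here: it explains why the user must watch the desired letter flash several times before the system can reliably pick it.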

Passive neural interfaces

In passive neural computer interfaces, the user does not issue any commands; the system simply monitors brain activity on its own. Such devices can be used in medicine, for example, to assess cognitive load: the patient is asked to perform a task (such as solving a problem) while doctors monitor his brain activity.
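As an illustration of passive monitoring, the sketch below flags periods of high cognitive load using the ratio of theta-band to alpha-band power. This ratio is one heuristic reported in workload research (theta activity tends to rise and alpha activity tends to fall as mental effort increases), not a standard clinical method. The function names, the three-window resting baseline, and the 1.5× threshold are hypothetical, and the band powers are assumed to have been computed beforehand.

```python
from statistics import mean


def workload_index(theta_power: float, alpha_power: float) -> float:
    """Theta/alpha power ratio; in this heuristic, higher values suggest higher load."""
    return theta_power / alpha_power


def flag_high_load(windows, baseline_windows=3, factor=1.5):
    """Return indices of windows whose workload index exceeds factor x the resting baseline.

    windows: list of (theta_power, alpha_power) tuples, one per time window.
    The first `baseline_windows` entries are assumed to be recorded at rest.
    """
    baseline = mean(workload_index(t, a) for t, a in windows[:baseline_windows])
    return [
        i
        for i, (theta, alpha) in enumerate(windows)
        if i >= baseline_windows and workload_index(theta, alpha) > factor * baseline
    ]


session = [
    (4.0, 8.0), (4.2, 8.4), (3.8, 7.6),  # resting baseline: ratio 0.5
    (4.5, 8.0),  # easy task: ratio about 0.56
    (6.0, 5.0), (7.0, 4.0),  # hard problem: ratios 1.2 and 1.75
]
print(flag_high_load(session))  # → [4, 5]
```

Note that the user issues no commands at any point: the system only watches the signal and reports when it changes relative to the person's own resting level.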

Invasive neural interfaces

Invasive neural interfaces involve implanting electrodes directly into the patient’s brain. At the moment, such devices are not very common and are mostly tested on animals. In the future, they are likely to become more widespread as medicine advances and as efforts to restore movement to paralyzed people grow.

Sometimes partially invasive neural interfaces are put into a separate category. They involve electrocorticography – that is, the placement of electrodes on the surface of the brain. Such procedures are highly risky and are not performed frequently. They are used only for medical purposes.

Non-invasive neural interfaces

Non-invasive neural interfaces are the most widespread type today and will likely remain relevant in the future, mainly because of their simplicity. They read brain activity exclusively with external sensors. Methods include electroencephalography, near-infrared spectroscopy, and other non-hazardous techniques.

Exocortex

It is worth looking at the term "exocortex". It combines the Greek "exo", meaning outside, and the Latin "cortex", meaning bark. Here, "cortex" refers to the cerebral cortex, the outer layer of the brain.

An exocortex is any external system used to extend the capabilities of our natural cortex, that is, of the mind. In a broad sense, the term can cover a calculator, the Internet, or even a pen and a sheet of paper. In the context of neural interfaces, however, an exocortex is an external computer connected to the brain and designed to enhance its capabilities.

In the 1960s, DARPA became interested in augmenting human capabilities with a computer connected to the brain. At the time, artificial intelligence on its own was not expected to reach such an advanced level.

As brain-computer interfaces develop, exocortices may become a reality, and not only in the form of a computer: another human brain could also serve as an external computing device. Some scientists believe that this technology could lead to the transfer of human consciousness into a robot, or even to the union of several people into a single superorganism.

What's next?

The human brain is a complex structure to study, so the development of neural interfaces is not progressing as fast as we would like. Nevertheless, science has made significant progress in this field, and interest in it has only grown in recent years.

Introducing such devices is a long and laborious process. But the more companies get involved, the more accessible the devices will become and the more funding will go to basic research, which in turn will drive the field forward even further.

As a result, more and more people in need will be able to receive long-awaited help. And our civilization will take another great step forward.
