Norwegian developers have trained a neural network to swap real people's faces for anonymous computer-generated versions. This technology will help preserve the privacy of people in photos.

Writers of news articles and investigative reports often deliberately change the names of the people in their stories. They may do this at the request of the people involved or to conceal the details of a criminal investigation.

With text, it’s often enough to simply change a name. But in order to preserve the privacy of people in photos or videos, their faces need to be pixelated or covered with black rectangles, and the pitch of their voice should be transformed to avoid identification.

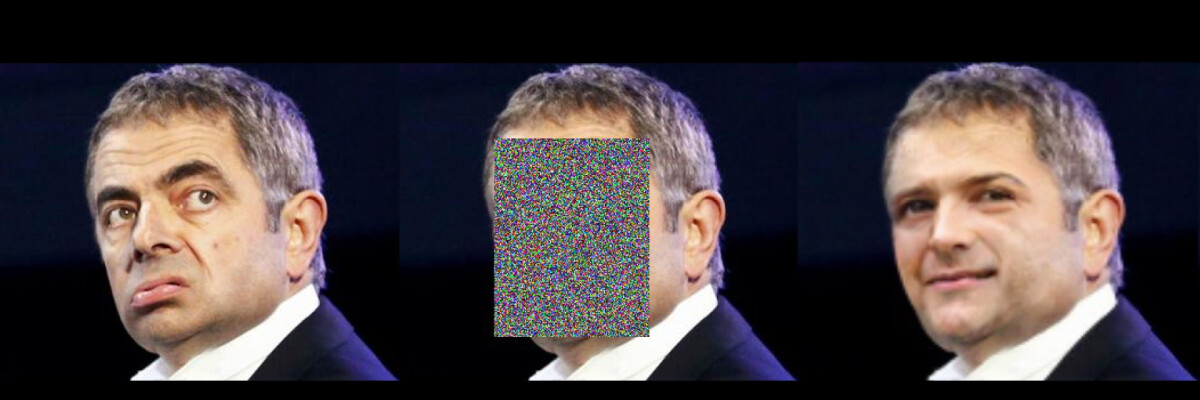

Several Norwegian developers have proposed a method of transforming photos that replaces real people’s faces with versions generated by a neural network. The new technology conceals people’s identities without covering the images with ugly black rectangles or blurring faces. Because viewers are usually more interested in the message than in a subject’s appearance, this works much like changing names, with realistic computer-generated masks serving as pseudonyms.

A group of developers from the Norwegian University of Science and Technology has combined several image processing algorithms into a single neural network pipeline, which was trained on 40 million images over 17 days. The program's source code has been shared on GitHub.

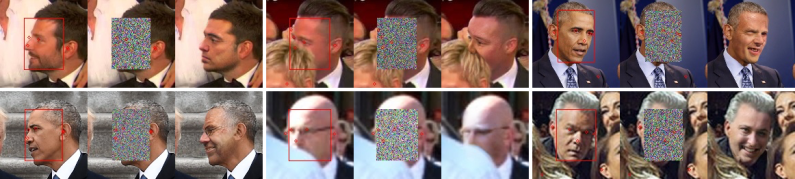

Replacing a face in a photograph takes several steps. First, the S3FD neural network processes the image and marks the rectangular areas that contain faces. Then the Mask R-CNN neural network identifies key points in these areas: eyes, noses, ears, and shoulders. Once the key points are identified, the faces are filled with gray and their pixels are then replaced with random values. The final step, the creation of new faces, is done by a U-Net neural network. It receives the anonymized images and fills in new faces according to the key points.
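To make the order of these steps concrete, here is a minimal Python sketch. It is not the developers' code: the function names `mask_face` and `anonymize` are hypothetical, the image is a plain list of RGB tuples rather than a real image object, and the three models (face detector, key point detector, face generator) are passed in as placeholder callables. The gray fill is skipped because the random noise overwrites it anyway.

```python
import random


def mask_face(image, box, seed=None):
    """Return a copy of `image` with the pixels inside `box` replaced by noise.

    image: list of rows, each row a list of (r, g, b) tuples.
    box:   (x0, y0, x1, y1) with x1 and y1 exclusive; assumed detector output.
    """
    rng = random.Random(seed)
    x0, y0, x1, y1 = box
    masked = [list(row) for row in image]  # copy rows; the input stays untouched
    for y in range(max(y0, 0), min(y1, len(masked))):
        row = masked[y]
        for x in range(max(x0, 0), min(x1, len(row))):
            row[x] = (rng.randrange(256), rng.randrange(256), rng.randrange(256))
    return masked


def anonymize(image, detect_faces, find_keypoints, generate_face):
    """Chain the stages; the three callables stand in for the real models."""
    result = image
    for box in detect_faces(image):
        # Key points come from the original image; the generator only ever
        # receives the masked version, never the original face pixels.
        keypoints = find_keypoints(image, box)
        masked = mask_face(result, box)
        result = generate_face(masked, box, keypoints)
    return result
```

In this sketch the generator is handed only the noise-filled region and the key points, which mirrors the idea that the new face is built without access to the original one.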

Most notably, the neural network does not save the initial images with the original faces, so it does not violate the General Data Protection Regulation (GDPR), which governs the handling of personal data in Europe.
