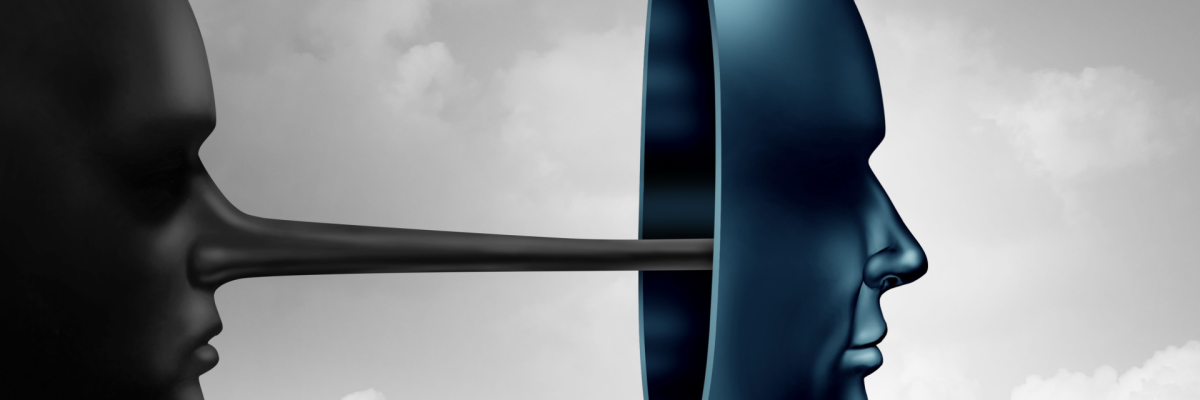

The era when we could trust our eyes and ears is rapidly fading. The culprit? Deepfakes – hyper-realistic video and audio forgeries crafted using artificial intelligence.

They can be used for all sorts of purposes, from harmless entertainment to large-scale disinformation campaigns and international scams. In this article, we’ll explain how to spot these neural network fakes, how serious the threat is, and which technologies you can use to outsmart the crooks!

What is a Deepfake, and Why Does It Exist?

But first, a little theory for those who aren’t familiar with the topic. A deepfake is a fake video or audio recording in which one person’s face or voice is replaced with another’s. Deepfakes are produced by neural networks trained on vast amounts of data (photos, videos, audio recordings), which allows them to generate incredibly convincing forgeries. In other words, AI analyzes the features of one person’s face, expressions, voice, and movements, and then transfers those characteristics onto footage or audio of someone else.

In the past, deepfakes were the domain of advanced specialists and required immense computing power. Today, however, there are relatively easy-to-use programs and online services that allow almost anyone to create a deepfake. This has been made possible by the development of open-source projects and improvements in machine learning algorithms. Logically, as the technology has become more accessible, cases of its misuse have also increased.

So, besides entertainment (the original purpose of deepfakes, along the lines of “try on the face of your favorite celebrity”), deepfakes can be used for the following unpleasant and dangerous purposes:

- Political Disinformation: Deepfakes can be used to spread false news, compromise political figures, and create fake statements. This can influence public opinion and even election results. The most striking example is the 2018 video in which former US President Barack Obama supposedly makes insulting remarks about Donald Trump. And that was seven years ago! Even then, few people could distinguish the video from the real thing. Imagine what AI is capable of today!

- Financial Fraud: Deepfakes can be used to fake video conferences and voice messages in order to deceive investors. Extortion and impersonation scams are also common: the person in the video asking for financial help is not the victim’s relative at all, but a stranger wearing their face. For example, in 2019 fraudsters used AI-generated audio to imitate the voice of a parent company’s chief executive and convinced the head of its UK energy subsidiary to transfer $243,000 to their account.

- Pornography: Deepfake videos that depict celebrities (or ordinary people) without their consent are a serious violation of privacy and human rights. In 2020, for instance, there were several incidents in which deepfake porn purporting to feature actress Emma Watson was circulated.

- Cyberbullying: Nothing prevents an experienced user of neural networks from creating a compromising photo of someone they dislike and spreading it on social networks to bully them. This can cause psychological trauma to the victim and even lead to suicide.

Behind the Scenes of Digital Illusions: How Deepfakes Are Created

The process of creating a deepfake involves several stages that require a certain level of technical knowledge and computing resources. At the heart of this process is artificial intelligence, in particular neural networks. Step by step, it looks like this:

- Data Collection: The first and perhaps most important step is data collection. You need to collect as many photos and videos as possible of the person whose face will be used in the deepfake (the so-called “target” face). This data is needed to train the neural network. The more data, the more realistic the result will be. Collecting this material without consent often already violates privacy laws, which is why many deepfakes are legally questionable from the very first step.

- Neural Network Training: The neural network, usually a complex structure of many layers, analyzes the facial features, expressions, head movements, and other characteristics of the target face. At the same time, the neural network is trained on the data of the face that will be replaced (the “donor” face). During the training process, the network identifies patterns and learns to recreate the target person’s face based on the donor’s face data. This process can take hours or even days, especially if the amount of data is large and high-quality results are required.

- Face Replacement: After training, the neural network proceeds to directly replace the donor’s face with the target person’s face in the video recording. It analyzes each frame of the video and generates a new image in which the donor’s face is changed to the target’s face, while maintaining the lighting, viewing angle, and other parameters. This process requires significant computing resources, and graphics processing units (GPUs) are often used to speed it up.

- Post-processing: At the final stage, the deepfake undergoes post-processing to improve image quality, smooth out artifacts (errors the model introduces during generation, such as six fingers on a hand instead of five), and make the forgery even more realistic. Post-processing may include color correction, sharpening, noise removal, and lighting adjustment. The goal of this stage is to make the deepfake indistinguishable from a real video.
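To make the “smoothing out artifacts” step more concrete, here is a minimal sketch of one common trick: blending a generated face patch into the frame with a feathered (soft-edged) mask so there is no hard seam. Everything here is an illustrative assumption rather than the method of any particular deepfake tool: the function names `feather_mask` and `blend_patch` are made up, images are plain grayscale lists of floats, and real pipelines work on color frames with far more elaborate masks.

```python
def feather_mask(width, height, border):
    """Return a height x width mask: 1.0 in the interior, ramping to 0.0 at the edges."""
    mask = []
    for y in range(height):
        row = []
        for x in range(width):
            # Distance (in pixels) to the nearest edge of the patch.
            edge_dist = min(x, y, width - 1 - x, height - 1 - y)
            row.append(min(1.0, edge_dist / border))
        mask.append(row)
    return mask


def blend_patch(frame, patch, top, left, border=2):
    """Alpha-blend a grayscale patch into a copy of frame using a feathered mask."""
    height, width = len(patch), len(patch[0])
    mask = feather_mask(width, height, border)
    out = [row[:] for row in frame]          # leave the original frame untouched
    for y in range(height):
        for x in range(width):
            alpha = mask[y][x]
            original = frame[top + y][left + x]
            out[top + y][left + x] = alpha * patch[y][x] + (1.0 - alpha) * original
    return out


frame = [[0.0] * 8 for _ in range(8)]      # dark background frame
patch = [[1.0] * 6 for _ in range(6)]      # bright generated "face" patch
blended = blend_patch(frame, patch, top=1, left=1, border=2)

for row in blended:
    print(" ".join(f"{value:.1f}" for value in row))
```

The patch fades in gradually from its edges instead of appearing as a sharp rectangle. This is also why blurry or soft boundaries around the face can be a telltale sign of a forgery.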

To create complex and high-quality deepfakes, generative adversarial networks (GANs) are often used. GANs consist of two neural networks: a generator and a discriminator. The generator creates fake images, and the discriminator tries to distinguish them from the real ones. The generator is constantly improving to deceive the discriminator, and the discriminator is constantly improving to expose the generator. As a result of this “game,” GANs learn to generate very realistic deepfakes. Research in the field of GANs is actively being conducted in scientific centers around the world, which leads to a continuous improvement in the quality of deepfakes.
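The “game” between the two networks can be expressed through their loss functions. The sketch below is only an illustration under stated assumptions: it uses the standard binary cross-entropy formulation of GAN losses, the function names are made up, and the discriminator scores are hypothetical numbers rather than the output of a trained model.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when real samples score high and fakes score low."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator scores fakes as real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (estimated probability that a sample is real).
early = {"d_real": [0.9, 0.8], "d_fake": [0.1, 0.2]}   # fakes are easily caught
later = {"d_real": [0.6, 0.5], "d_fake": [0.5, 0.4]}   # fakes are nearly as convincing

for name, scores in (("early", early), ("later", later)):
    print(name,
          "discriminator:", round(discriminator_loss(**scores), 3),
          "generator:", round(generator_loss(scores["d_fake"]), 3))
```

As the fakes become more convincing, the generator’s loss falls and the discriminator’s loss rises. Training alternates between updating each network to push its own loss back down.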

Playing Detective: How to Spot a Deepfake

Despite the sophistication of modern AI, it is still possible to recognize its output, although it is difficult. First of all, you will need attentiveness and a critical eye to assess the following:

- Unnatural Facial Movements: Pay attention to facial expressions, blinking, and lip movements. In deepfakes, they often look unnatural, jerky, or too smooth, and the mouth movements don’t match the words. Also, the eye movements may be out of sync with the head movements, and the facial muscles may not be working correctly. Pay particular attention to the emotions of the person depicted and how they manifest. For example, it is almost impossible to frown strongly and smile at the same time.

- Blur or Artifacts: Blurriness, distortions, irregularities, or other artifacts may be noticeable in the area of the face, especially around the edges of the face and hair. This also applies to hands and the ends of clothing, such as collars, dress hems, and belts. Artifacts are usually caused by poor-quality source data or imperfect processing algorithms.

- Inconsistent Lighting and Shadows: Pay attention to how light falls on the face and body. In deepfakes, lighting and shadows may not match each other. For example, the sun is shining from the right, yet the shadow also falls to the right instead of the left, and on top of that it has the wrong shape.

- Poor Quality Sound: Pay attention to audio artifacts, such as hissing, crackling, or echoes.

- Unnatural Skin Tone: The skin color of the person’s face may differ from that of their hands, or from how the person usually looks.

- Context and Source: Finally, be sure to check the source that published the video. If it’s a video from social media, you should immediately treat it with suspicion. Cross-check the information against major media outlets and news agencies.
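Some of these checks can be partly automated. As a rough sketch, the blinking check from the list above could look like the code below. It assumes that a separate face-landmark detector (not shown) has already produced an eye aspect ratio (EAR) value for every frame, where low values mean the eye is closed. The function names, the 0.2 threshold, and the “plausible” range of 5 to 40 blinks per minute are illustrative assumptions, not validated constants.

```python
def count_blinks(ear_series, closed_threshold=0.2, min_frames=2):
    """Count runs of at least min_frames consecutive frames with EAR below the threshold."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < closed_threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:          # the clip ended while the eyes were closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps):
    """Convert the blink count into a rate, given the video frame rate."""
    duration_minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series) / duration_minutes


def looks_suspicious(ear_series, fps, low=5.0, high=40.0):
    """Flag clips whose blink rate falls outside an assumed plausible range."""
    rate = blinks_per_minute(ear_series, fps)
    return rate < low or rate > high


# Synthetic 10-second clips at 30 fps; EAR near 0.3 means open eyes, near 0.1 closed.
fps = 30
open_eye, closed_eye = 0.30, 0.10
natural = ([open_eye] * 97 + [closed_eye] * 3) * 3      # three short blinks
staring = [open_eye] * 300                              # no blinks at all

print("natural clip suspicious:", looks_suspicious(natural, fps))
print("staring clip suspicious:", looks_suspicious(staring, fps))
```

A flag from a heuristic like this is only a hint, not proof: a short clip of a real person may contain no blinks, and a well-made deepfake may blink quite naturally.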

Recently, a number of research groups have made significant progress in developing technologies capable of detecting deepfakes with high accuracy. For example, researchers at Drexel University have developed the MISLnet system, which can do this kind of analysis for you by breaking the image down literally into pixels and tracing the relationships between them. The reported accuracy of this algorithm in recognizing deepfakes is up to 98%.
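To give a feel for what “tracing the relationship between pixels” can mean, here is a toy sketch. It is not MISLnet and not its algorithm: it simply predicts each pixel from the average of its four neighbors and measures how far the actual value deviates. The function names and the tiny synthetic images are assumptions made for illustration.

```python
def prediction_residual(image):
    """For each interior pixel, subtract the mean of its four direct neighbors."""
    height, width = len(image), len(image[0])
    residual = [[0.0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            predicted = (image[y - 1][x] + image[y + 1][x]
                         + image[y][x - 1] + image[y][x + 1]) / 4.0
            residual[y][x] = image[y][x] - predicted
    return residual


def mean_abs_residual(image):
    """Average absolute residual over interior pixels: a crude 'unnaturalness' score."""
    residual = prediction_residual(image)
    height, width = len(image), len(image[0])
    values = [abs(residual[y][x])
              for y in range(1, height - 1)
              for x in range(1, width - 1)]
    return sum(values) / len(values)


# A smooth gradient stands in for a natural image region.
smooth = [[float(x + y) for x in range(6)] for y in range(6)]

# The same region with one manipulated pixel.
tampered = [row[:] for row in smooth]
tampered[3][3] += 10.0

print("smooth score:  ", mean_abs_residual(smooth))
print("tampered score:", mean_abs_residual(tampered))
```

Learned detectors go much further than this, using filters trained on large datasets instead of a fixed neighbor average, but the underlying idea of looking for pixel patterns that a camera would not produce is similar.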

You can also use the following services to check a file for authenticity:

- Deepware Scanner: A service from Deepware that analyzes videos and images using machine learning methods to identify signs of forgery.

- InVID & WeVerify: Tools developed for journalists and fact-checkers that allow you to analyze videos, identify suspicious elements, and verify their origin.

- Microsoft Video Authenticator: A tool developed by Microsoft that analyzes videos to identify signs of forgery, such as inconsistencies in lighting and facial expressions.

- FotoForensics: An online service that uses various methods of image analysis to identify signs of editing and manipulation.

The threat of deepfakes is constantly growing, requiring us to be more vigilant. According to a 2023 report by Sensity AI, the number of deepfake videos detected on the web increased by 123% compared to the previous year, indicating a growing need for new protection methods. Moreover, according to a study by the University of Southern California, 48% of participants were unable to distinguish an AI-generated face from a real one. In other words, almost half of the people could not correctly determine which face was real and which was a deepfake, which means that all of them could easily be misled.

To keep their materials from being used for malicious purposes, companies use watermarks and cryptographic protection that confirms authenticity. For example, Adobe leads the Content Authenticity Initiative, which aims to let creators attach cryptographic signatures to digital materials in just a few clicks. Blockchain technologies also help in the fight against fakes, as do technologies that read physiological signals from the people shown in a video, such as breathing rate (is there any breathing at all, does it look natural, and so on).
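The idea behind cryptographic protection can be shown in a few lines. The sketch below is a deliberate simplification and not how the Content Authenticity Initiative works internally: it uses an HMAC with a shared secret from Python’s standard library as a stand-in for the public-key signatures that real provenance systems rely on. The key, the “video bytes”, and the function names are all hypothetical.

```python
import hashlib
import hmac


def sign_media(media_bytes, secret_key):
    """Return a hex tag that binds the publisher's key to the exact bytes of the file."""
    return hmac.new(secret_key, media_bytes, hashlib.sha256).hexdigest()


def verify_media(media_bytes, secret_key, tag):
    """Recompute the tag and compare in constant time; any edit to the bytes breaks it."""
    expected = sign_media(media_bytes, secret_key)
    return hmac.compare_digest(expected, tag)


key = b"publisher-demo-key"           # hypothetical key, for illustration only
original = b"...video bytes..."       # stand-in for the contents of a real media file
tag = sign_media(original, key)

print("untouched file verifies:", verify_media(original, key, tag))
print("edited file verifies:   ", verify_media(original + b"x", key, tag))
```

Changing even a single byte of the file invalidates the tag. With public-key signatures, anyone can perform this check without knowing the publisher’s secret, which is what makes the approach practical for media shared on the open web.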

Deepfakes are a serious threat to society that can be used for disinformation, fraud, and manipulation. However, thanks to the development of technology and increased user awareness, we can effectively combat this threat. It is important to remember that critical thinking, caution, and tools for verifying the authenticity of information are our main allies in this war. The battle for truth in the digital age is just beginning. You should not rely on technology alone and wait for scientists to finally invent a “cure”: that is unlikely to happen, because AI is developing by leaps and bounds and easily outpaces any countermeasures. So don’t forget about your main weapon: attentiveness and a sharp analytical mind!